WUWT March 3, 2019

Guest opinion: Dr. Tim Ball

Two recent events triggered the idea for this article. On the surface they appear unconnected, but that appearance is itself an indirect result of the original goal and methods of global warming science. We learned from Australian Dr. Jennifer Marohasy of another manipulation of the temperature record in an article titled “Data mangling: BoM’s Changes to Darwin’s Climate History are Not Logical.” The second event was the claim of final, conclusive evidence of Anthropogenic Global Warming (AGW). The original article appeared in the journal Nature Climate Change, and the fact that it appeared there raises flags for me. The publishers of the journal Nature created Nature Climate Change. Nature published as much as it could to promote the deceptive science used for the untested AGW hypothesis. However, it was limited by the rules and procedures required for academic research and publication. That wouldn’t be a problem if global warming were purely about science, but it never was. It was a political use of science for a political agenda from the start. The original article came from a group led by Ben Santer, a person with a long history of involvement in the AGW deception.

An article titled “Evidence that humans are responsible for global warming hits ‘gold standard’ certainty level” provides insight, but it also includes Santer’s comment to Reuters: “The narrative out there that scientists don’t know the cause of climate change is wrong. We do.” It is a continuation of his work to promote the deception. He based his comment on the idea that we know the cause of climate change because of the work of the Intergovernmental Panel on Climate Change (IPCC). But the IPCC looked only at human causes, and it is impossible to determine the human contribution if you don’t know and understand natural climate change and its causes. If we did know and understand them, forecasts would always be correct. And if we already do, then Santer, all the other researchers, and the millions of dollars are no longer necessary.

So why does Santer make such a claim? For the same reason they took every other action in the AGW deception: to promote a stampede, driven by urgency, to adopt the political agenda. It is classic “the sky is falling” alarmism. Santer’s work follows the IPCC’s recent ‘emergency’ report, presented at COP 24 in Poland, which claimed we have 12 years left.

One of the earliest examples of producing inaccurate science to amplify urgency concerned the residence time of CO2 in the atmosphere. In response to the IPCC’s claims that urgent action was needed, several researchers pointed out that CO2 levels, and the rate at which they were increasing, were insufficient to warrant urgent action. In other words, don’t rush to judgement. The IPCC’s response was to claim that even if production stopped, the problem would persist for decades because of CO2’s 100-year residence time. A graph produced by Lawrence Solomon then appeared, showing that the actual residence time was 4 to 6 years (Figure 1).

Figure 1

This pattern of underscoring urgency permeates the entire history of the AGW deception.

Sir Walter Scott said, “What a tangled web we weave when first we practice to deceive.” Another great author, Mark Twain, expanded on that idea from a different perspective: “If you tell the truth you don’t have to remember.” In a strange way, the two observations contradict each other, or at least explain how the deception spread, persisted, and achieved its damaging goal. The web becomes so tangled, and the connections between the tangles so complicated, that people never see what is happening. This is particularly true when the deception concerns an arcane topic unfamiliar to most people.

All these observations apply to the biggest deception in history, the claim that human production of CO2 is causing global warming. The objective is unknown to most people even today, and that is a measure of its success. The real objective was to prove that overpopulation combined with industrial development was exhausting resources at an unsustainable rate. As Maurice Strong explained, the problem for the planet is the industrialized nations, and isn’t it our responsibility to get rid of them? The hypothesis this generated was that CO2, the byproduct of burning fossil fuels, was causing global warming and destroying the Earth. They had to protect the charge against CO2 at all costs, and that is where the tangled web begins.

At the start, the IPCC and the agencies supporting it had control over the two important variables: temperature and CO2. Phil Jones revealed the degree of control over temperature when Warwick Hughes asked which stations he had used in his graph and how they were adjusted. Hughes received the following reply on 21 February 2005:

“We have 25 or so years invested in the work. Why should I make the data available to you, when your aim is to try and find something wrong with it.”

Control over the global temperature data continued until the first satellite data appeared in 1978. Despite their limitations, the satellite data provided more complete coverage, reportedly 97 to 98%, compared with approximately 15% for the surface data.

Regardless of the difference in coverage, the surface data now had to approximate the satellite data, as Figure 2 shows.

Figure 2

This prevented changes only to the most recent 41 years of the record; it didn’t prevent altering the historical record. Dr. Marohasy’s article is just one more illustration of the pattern. Tony Heller produced the most complete analysis of the adjustments. Those making the changes claim, as they have again in response to Marohasy’s challenge, that the adjustments are necessary to correct for instrument errors and for changes in site and surroundings, such as the Urban Heat Island Effect (UHIE). The problem is that the changes are always in one direction: they lower the historic levels. This steepens the gradient of the temperature curve, increasing both the amount and the rate of warming. One of the first examples of such adjustments occurred with the Auckland, New Zealand record (Figure 3). Notice the overlap in the most recent decades.

Figure 3
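
To make the arithmetic of the slope argument concrete, here is a minimal Python sketch using made-up numbers, not any real station record. It fits an ordinary least-squares trend to a toy series, then lowers only the older half of the values and fits again. The decadal spacing, the 0.05-degree-per-decade rise, the 0.3-degree offset, and the helper name `ols_slope` are all hypothetical choices for illustration.

```python
# Hypothetical illustration: toy numbers only, not any real temperature record.

def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den

# Ten decadal readings (hypothetical), rising 0.05 degrees per decade.
years = list(range(1900, 2000, 10))
raw = [15.0 + 0.05 * i for i in range(len(years))]

# Hypothetical adjustment: lower the five oldest readings by 0.3 degrees
# and leave the five most recent readings untouched.
adjusted = [t - 0.3 if i < 5 else t for i, t in enumerate(raw)]

raw_trend = ols_slope(years, raw) * 100        # degrees per century
adjusted_trend = ols_slope(years, adjusted) * 100

print(f"raw trend:      {raw_trend:.2f} degrees per century")
print(f"adjusted trend: {adjusted_trend:.2f} degrees per century")
```

The recent half of the series is identical in both fits; lowering only the older values raises the fitted trend from about 0.50 to about 0.95 degrees per century in this toy case.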

The IPCC took control of the CO2 record from the start, and that control continues. The IPCC uses the Mauna Loa record, and data from other sites using similar instruments and techniques, as the basis for its claims. Charles Keeling, one of the earliest proponents of AGW, was recognized and hired by Roger Revelle at the Scripps Institution of Oceanography. Yes, that is the same Revelle whom Al Gore glorifies in his movie An Inconvenient Truth. Keeling established a CO2 monitoring station that is the standard for the IPCC. The problem is that Mauna Loa is an oceanic-crust volcano; that is, its lava is less viscous and more gaseous than that of continental-crust volcanoes like Mt Etna. A documentary titled Future Frontiers: Mission Galapagos reminded me of studies done at Mt Etna years ago that showed high levels of CO2 emerging from the ground for hundreds of kilometers around the crater. The documentary is the usual “people are destroying the planet” sensationalist BBC rubbish. However, at one point they dive in the waters around the tip of a massive volcanic island and are amazed to see CO2 visibly bubbling up all across the ocean floor.

Charles Keeling patented his instruments and techniques. His son Ralph continues the work at the Scripps Institution of Oceanography and is a member of the IPCC. His most recent appearance in the media involved an alarmist paper with a major error: an overestimate of 60%. Figure 4 shows him with the master of PR for the IPCC narrative, Naomi Oreskes.

Figure 4

Figure 5 shows the current Mauna Loa plot of CO2 levels. It shows a steady increase from 1958 with the supposed seasonal variation.

Figure 5

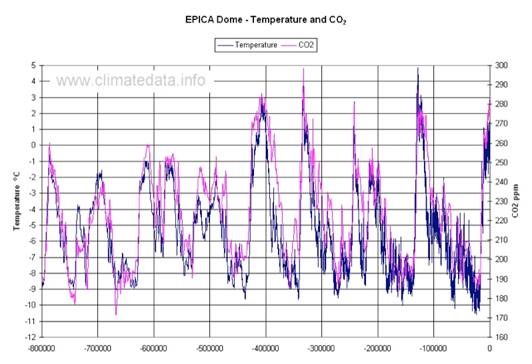

This increase is steady over more than 60 years, which is remarkable when you look at the longer record. For example, the Antarctic ice core record (Figure 6) shows considerable variability.

Figure 6

The ice core record is made up of data from bubbles that take a minimum of 70 years to be enclosed. A 70-year smoothing (moving) average is then applied. The combination removes most of the variability, which eliminates any chance of understanding that variability and predetermines the outcome.
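
The smoothing effect described above can be illustrated with a short Python sketch. It assumes one value per year and applies a simple 70-point trailing moving average to a made-up series that swings between 280 and 320 ppm every other year. The series and the helper name `moving_average` are hypothetical, and the actual ice core processing is not reproduced here.

```python
import statistics

def moving_average(values, window):
    """Simple (unweighted) trailing moving average.

    Returns len(values) - window + 1 points, each the mean of `window`
    consecutive input values.
    """
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]

# Hypothetical annual series (ppm): alternates 280, 320, 280, 320, ...
series = [280.0 if year % 2 == 0 else 320.0 for year in range(200)]

# Assumed 70-year window, one value per year.
smoothed = moving_average(series, 70)

print("raw std dev:     ", statistics.pstdev(series))
print("smoothed std dev:", statistics.pstdev(smoothed))
```

Every 70-value window in this toy series contains 35 readings of 280 and 35 of 320, so each smoothed point is exactly 300 ppm: the raw standard deviation of 20 ppm drops to zero. Fluctuations shorter than the window are averaged away in the same manner, though not always this completely.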

Figure 7 shows the degree of smoothing. It compares 2000 years of CO2 measurements made with two different techniques. You can see the difference in variability, but also in the overall atmospheric levels, which range from approximately 260 ppm to 320 ppm.

Figure 7

However, we also have a more recent record that shows similar differences in variation and totals (Figure 8). It lets you see how the IPCC smoothed the record to control it. The dotted line shows the Antarctic ice core record and how Mauna Loa was created to continue the smooth but inaccurate record. Zbigniew Jaworowski, an atmospheric chemist and ice core specialist, explained what was wrong with CO2 measurements from ice cores. He set it all out in an article titled “CO2: The Greatest Scientific Scandal of Our Time.” Of course, they attacked him, yet the UN thought enough of his qualifications and abilities to appoint him head of the Chernobyl nuclear reactor disaster investigation.

Superimposed is a graph of more than 90,000 actual atmospheric measurements of CO2, a record that began in 1812. The publication of the level of oxygen in the atmosphere triggered the collection of the CO2 data. Scientists wanted to determine the percentage of each gas in the atmosphere. They were not interested in global warming or any other function of those gases; they just wanted to obtain accurate data, something the IPCC never did.

Figure 8

People knew about these records decades ago. The record was introduced into the scientific community by steam engineer Guy Callendar, in coordination with familiar names, as Ernst-Georg Beck noted:

“Modern greenhouse hypothesis is based on the work of G.S. Callendar and C.D. Keeling, following S. Arrhenius, as latterly popularized by the IPCC.”

Callendar deliberately selected a particular subset of the data, which let him claim the average level was 270 ppm and changed the slope of the curve from an increase to a decrease (Figure 9). Jaworowski circled the data Callendar selected, and I added the trend lines for all the data (red) and for Callendar’s selection (blue).

Figure 9
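
How a selection of points can reverse a fitted trend line is easy to show with a small Python sketch. The eight (year, ppm) points and the selection rule (keep only readings at or below 290 ppm) are invented for illustration; they are not Callendar’s data or his actual criteria, and `ols_slope` is a hypothetical helper.

```python
# Hypothetical (year, CO2 ppm) readings, invented for illustration only.
readings = [
    (1860, 290), (1870, 300), (1880, 280), (1890, 310),
    (1900, 285), (1910, 320), (1920, 275), (1930, 330),
]

def ols_slope(points):
    """Ordinary least-squares slope (ppm per year) through (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in points)
    den = sum((x - mean_x) ** 2 for x, _ in points)
    return num / den

# Hypothetical selection rule: keep only the low readings.
selected = [(x, y) for x, y in readings if y <= 290]

print(f"all data:  {ols_slope(readings):+.3f} ppm per year")
print(f"selection: {ols_slope(selected):+.3f} ppm per year")
```

In this toy set the full data rise at about +0.298 ppm per year, while the four low readings fall at -0.200 ppm per year, so the choice of points alone flips the sign of the trend.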

Tom Wigley, Director of the Climatic Research Unit (CRU) and one of the fathers of AGW, introduced the record to the climate community in a 1983 Climatic Change article titled, “The Pre-Industrial Carbon Dioxide Level.” He also claimed the record showed a pre-industrial CO2 level of 270 ppm. Look at the data!

The IPCC and its proponents established the pre-industrial CO2 level through cherry-picking and manipulation. They continue to control the atmospheric level through their control of the Mauna Loa record, and they control the data on annual human production. Here is their explanation:

The IPCC has set up the Task Force on Inventories (TFI) to run the National Greenhouse Gas Inventory Programme (NGGIP) to produce this methodological advice. Parties to the UNFCCC have agreed to use the IPCC Guidelines in reporting to the convention.

How does the IPCC produce its inventory Guidelines? Utilising IPCC procedures, nominated experts from around the world draft the reports that are then extensively reviewed twice before approval by the IPCC. This process ensures that the widest possible range of views are incorporated into the documents.

In other words, they make the final decision about which data they use for their reports and as input to their computer models.

This all worked for a long time, but as with all deceptions, even the most tangled web unravels. They continue to increase the atmospheric level of CO2 and then confirm it to the world by controlling the Mauna Loa annual level. However, they lost control of the recent temperature record with the advent of satellite data. They couldn’t lower the CO2 data because that would expose their entire scam, so they are on a treadmill of perpetuating whatever is left of their deception and manipulation. All that was left to them was artificially lowering the historical record, changing the name from global warming to climate change, and producing increasingly threatening narratives like the 12 years left and Santer’s certainty of doom.

NOTE: I do not give the work of Ernst-Georg Beck in Figure 8 any credence for accuracy, because the chemical procedure is prone to error and the measurement locations (mostly in cities, at ground level) have highly variable CO2 levels. Note how highly variable the data are. – Anthony